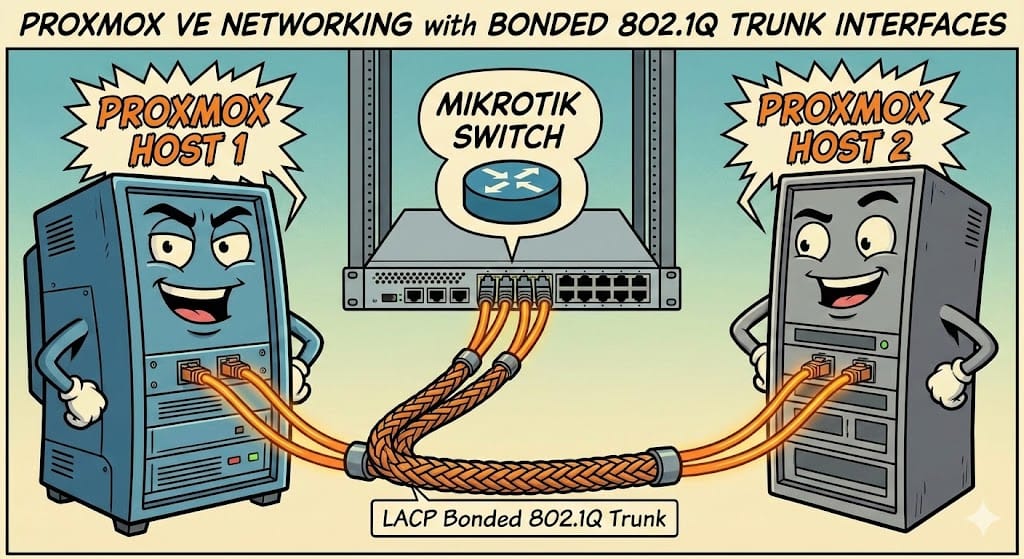

Proxmox VE Networking with Bonded 802.1Q Trunk Interfaces

As a network engineer, my homelab is designed to behave like a small enterprise environment rather than a simplified test bench. That means trunked VLANs, link aggregation, predictable traffic distribution, and clean separation of responsibilities between the hypervisor and the network. Proxmox VE is well suited for this approach because its networking model maps directly to standard Linux constructs used in production.

At the core of this design is an 802.1Q trunk carried over a bonded uplink, allowing multiple VLANs to traverse redundant physical interfaces into the hypervisor.

What Is Bonding and LACP?

Bonding is a networking technique that combines multiple physical network interfaces into a single logical interface. From the operating system’s perspective, the bonded interface behaves like one NIC, while under the hood it provides link redundancy, increased aggregate bandwidth, or both. Bonding is commonly used on servers and hypervisors to eliminate single points of failure and to better utilize available network capacity without changing application or IP-layer configurations.

LACP (Link Aggregation Control Protocol) is a standards-based bonding method, originally defined in IEEE 802.3ad and now maintained as IEEE 802.1AX, that allows multiple physical links to be grouped into one logical link in coordination with the connected switch. LACP dynamically negotiates which links participate in the bundle and monitors link health, providing both fault tolerance and load distribution. The load distribution is per flow: a single flow always uses one member link, so aggregate bandwidth grows only when traffic is spread across many flows. Because it is interoperable across vendors and widely deployed in enterprise networks, LACP is the preferred bonding mode when the switch infrastructure supports it.

What Is 802.1Q?

IEEE 802.1Q is the Ethernet standard for VLAN tagging. It allows multiple isolated Layer-2 networks to share a single physical link by inserting a VLAN identifier into each frame. In enterprise networks, 802.1Q trunks are used extensively between switches, firewalls, hypervisors, and storage systems. Using VLAN trunks in a homelab allows realistic segmentation without consuming additional NICs per network.
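To make the tagging step concrete, here is a minimal Python sketch of how a 4-byte 802.1Q tag is built and inserted into an Ethernet frame. The function names and the sample VLAN ID are my own illustration and not part of any Proxmox or Linux tooling; in practice the kernel or the switch does this for you.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as 802.1Q-tagged


def build_vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Return the 4-byte tag: 16-bit TPID followed by the 16-bit TCI."""
    if not 0 <= vid <= 4095:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP is 3 bits, DEI is 1 bit")
    # TCI layout: PCP (3 bits) | DEI (1 bit) | VLAN ID (12 bits)
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def tag_frame(frame: bytes, vid: int, pcp: int = 0) -> bytes:
    """Insert the tag right after the destination and source MACs (12 bytes)."""
    return frame[:12] + build_vlan_tag(vid, pcp) + frame[12:]
```

The 12-bit VLAN ID field is why a trunk can carry at most 4094 usable VLANs (IDs 0 and 4095 are reserved).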

Architecture Overview

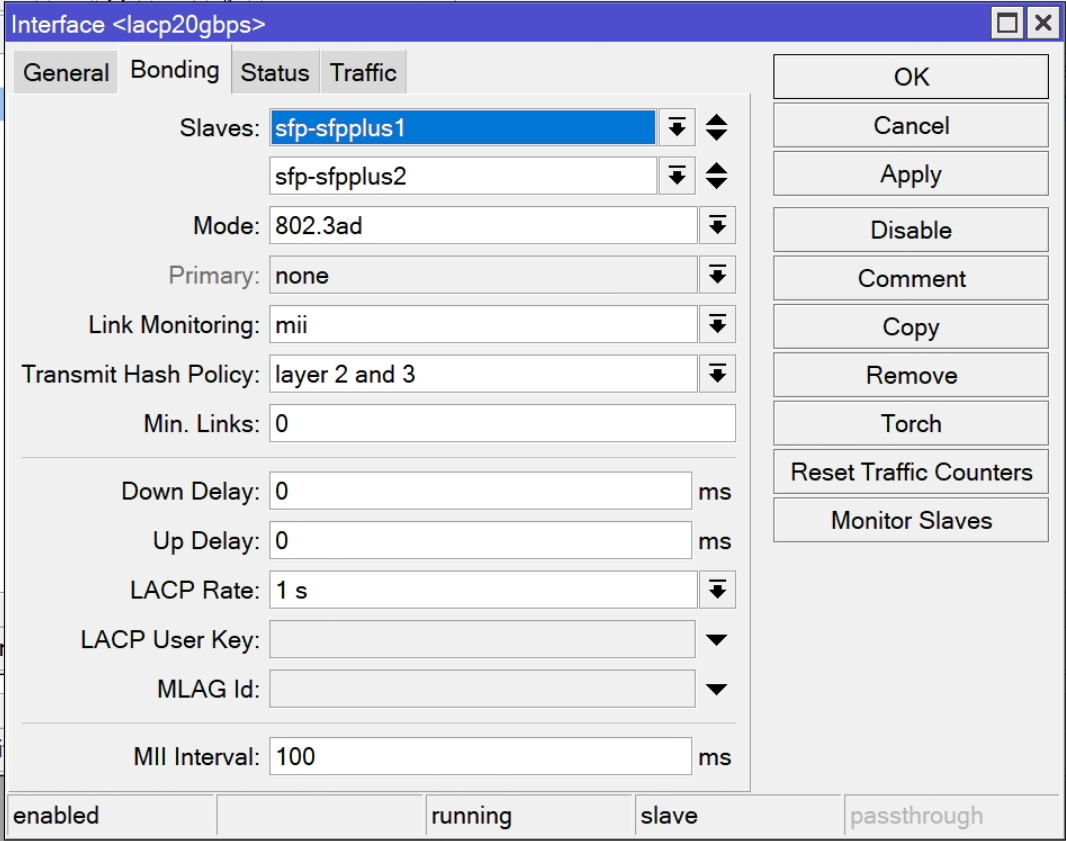

Two physical NICs on the Proxmox host are bonded using LACP and connected to a MikroTik switch configured as an 802.1Q trunk. All required VLANs are passed into Proxmox over this bond and terminated on a single VLAN-aware Linux bridge.

Logical flow:

        [MikroTik Switch]
       LACP + 802.1Q Trunk
                |
      enp1s0         enp1s0d1
           \          /
              bond0
                |
         trunk interface
                |
     VMs / Containers (Tagged)

The Proxmox host itself participates only in the management VLAN. All other VLANs are consumed exclusively by workloads (VMs and LXCs).

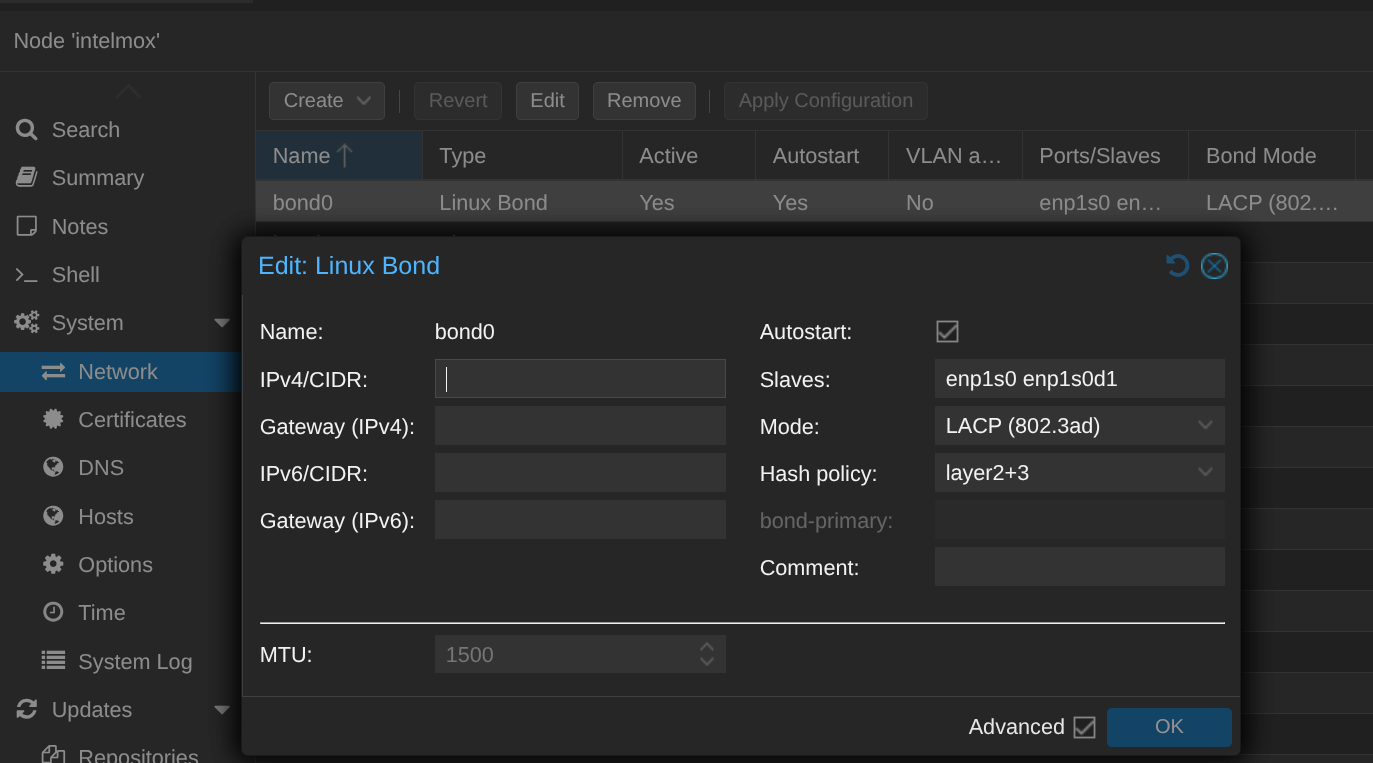

Proxmox Network Configuration

Below is a simplified version of /etc/network/interfaces, the Debian network configuration file that Proxmox VE uses:

    auto enp1s0
    iface enp1s0 inet manual
    #10Gbps PHY

    auto enp1s0d1
    iface enp1s0d1 inet manual
    #10Gbps PHY

    auto bond0
    iface bond0 inet manual
        bond-slaves enp1s0 enp1s0d1
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer2+3

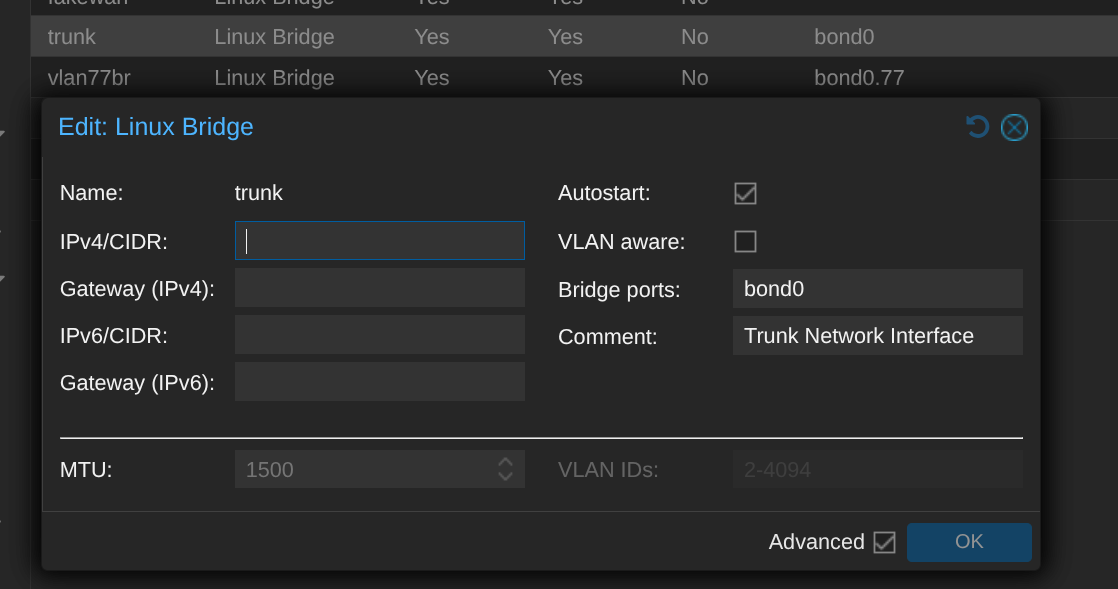

auto trunk

iface trunk inet manual

bridge-ports bond0

bridge-stp off

bridge-fd 0

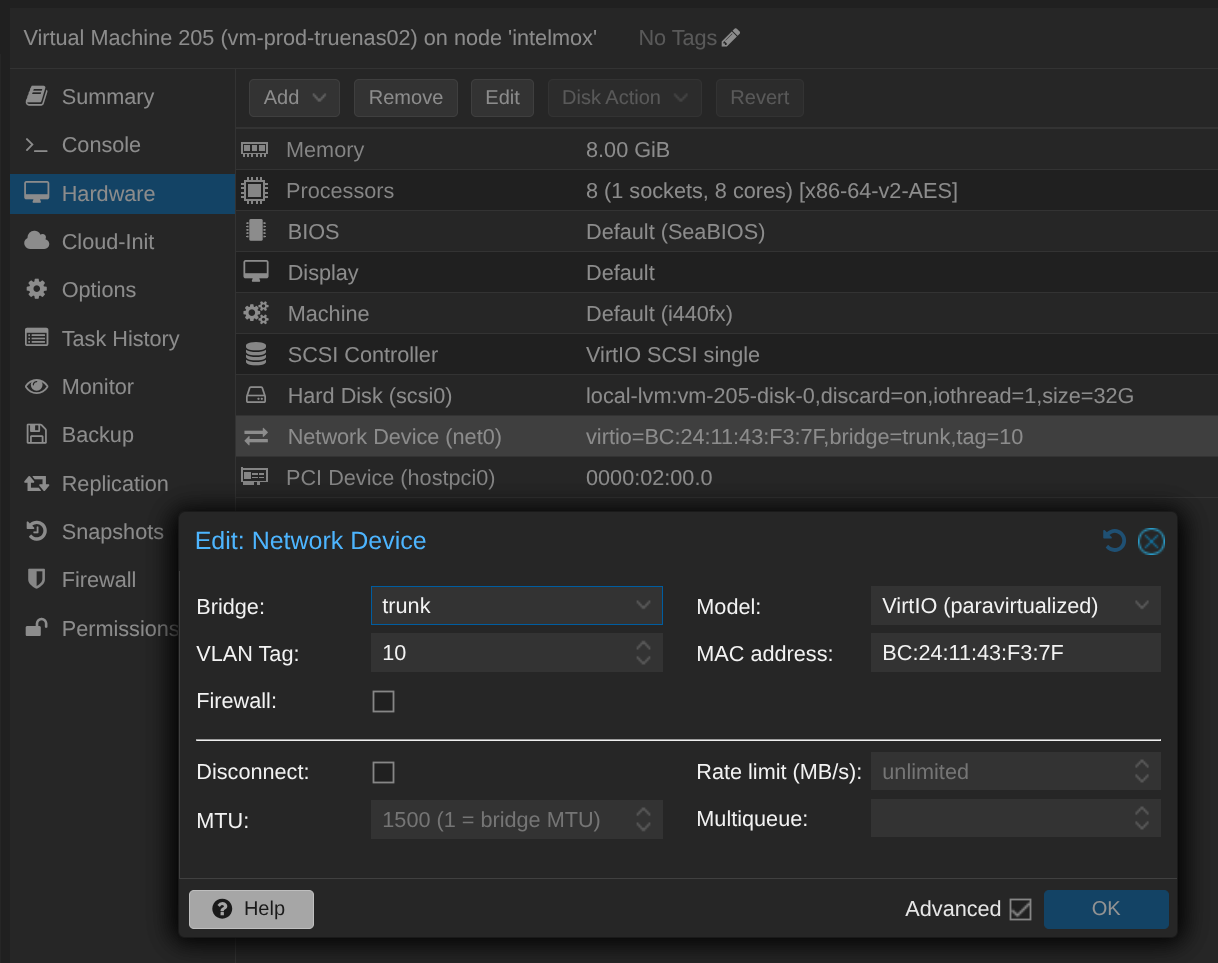

#Trunk Network InterfaceEach VM or container is assigned a VLAN tag on its virtual NIC, making it behave exactly like a server connected to a trunk-capable access port in an enterprise network.
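The simplified bridge stanza above does not show VLAN-aware options. If the bridge is meant to be VLAN-aware, as the architecture overview describes, it would typically also carry `bridge-vlan-aware yes` and a `bridge-vids` range. The Python sketch below renders such a stanza; the helper name is hypothetical, and the `2-4094` range is an assumption (it is the value Proxmox commonly writes by default), not something taken from the author's file.

```python
def render_vlan_aware_bridge(name: str, port: str, vids: str) -> str:
    """Render an /etc/network/interfaces stanza for a VLAN-aware bridge."""
    lines = [
        f"auto {name}",
        f"iface {name} inet manual",
        f"    bridge-ports {port}",
        "    bridge-stp off",
        "    bridge-fd 0",
        "    bridge-vlan-aware yes",   # let the bridge filter and tag per VLAN
        f"    bridge-vids {vids}",     # VLAN IDs allowed on the bridge ports
    ]
    return "\n".join(lines) + "\n"


# Assumed values: bridge "trunk" on top of "bond0", all usable VLAN IDs allowed
print(render_vlan_aware_bridge("trunk", "bond0", "2-4094"))
```

Narrowing `bridge-vids` to the VLANs actually in use is a reasonable hardening step, at the cost of one more place to update when a VLAN is added.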

Bonding Modes Explained (and Why LACP Is Used)

Linux bonding supports several modes, each with different trade-offs. In a homelab intended to replicate enterprise behavior, choosing the right mode matters.

Common Bonding Modes

active-backup (mode 1)

- One active NIC, one standby

- No switch configuration required

- Provides redundancy but no load sharing

- Often used for simple or unmanaged switches

balance-rr (mode 0)

- Round-robin packet distribution

- Can cause packet reordering

- Rarely recommended in modern networks

balance-xor (mode 2)

- Traffic distributed based on hashing

- Requires static link aggregation on the switch

- Less flexible than LACP

802.3ad / LACP (mode 4)

- Standards-based link aggregation

- Requires switch support

- Provides redundancy and load distribution

- Preferred in enterprise environments
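The trade-offs above can be condensed into a small lookup. This Python sketch is only an illustration of the decision logic described in this section; the `BondMode` class and `choose_mode` helper are hypothetical names, and marking balance-rr as needing switch-side grouping follows the Linux bonding documentation rather than the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BondMode:
    number: int                # numeric mode used by the Linux bonding driver
    name: str
    needs_switch_config: bool  # does the switch need a matching LAG?
    load_sharing: bool         # can traffic use more than one link at once?


MODES = {
    "balance-rr": BondMode(0, "balance-rr", True, True),
    "active-backup": BondMode(1, "active-backup", False, False),
    "balance-xor": BondMode(2, "balance-xor", True, True),
    "802.3ad": BondMode(4, "802.3ad", True, True),
}


def choose_mode(switch_supports_lacp: bool, switch_supports_static_lag: bool) -> BondMode:
    """Prefer LACP, fall back to a static hash-based LAG, then to active-backup."""
    if switch_supports_lacp:
        return MODES["802.3ad"]
    if switch_supports_static_lag:
        return MODES["balance-xor"]
    return MODES["active-backup"]
```

With a MikroTik switch that supports LACP, `choose_mode(True, True)` selects mode 4, which matches the configuration used in this article.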

Why LACP with Layer 2+3 Works Best on MikroTik

In this setup, LACP (802.3ad) is used with a layer2+3 transmit hash policy. This combination works particularly well with MikroTik switches for several reasons:

- Standards-based interoperability: MikroTik’s bonding and LACP implementation adheres closely to IEEE standards, making it reliable with Linux bonding.

- Predictable traffic distribution: Layer 2+3 hashing uses source/destination MAC and IP addresses, which works well for VM-heavy environments where many hosts communicate with multiple endpoints.

- Stable behavior for VLAN trunks: VLAN-tagged traffic remains consistently hashed, reducing the risk of uneven load or microbursts on a single link.

- Enterprise parity: This is the same hashing strategy commonly used on Cisco, Juniper, and Arista switches, making the homelab experience transferable to production networks.
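To show how a layer 2+3 policy maps a flow to a member link, here is a Python sketch that loosely follows the formula given in the Linux kernel bonding documentation (last MAC byte XOR, packet type, IP XOR, two folding shifts, then modulo the link count). Treat it as an approximation for intuition: the real kernel code differs in details, and the function names and sample addresses are made up for this example.

```python
import ipaddress


def layer23_hash(src_mac: str, dst_mac: str, src_ip: str, dst_ip: str,
                 ethertype: int = 0x0800) -> int:
    """Approximate the documented layer2+3 hash for an IPv4 flow."""
    # Only the last byte of each MAC address takes part in the hash
    src_last = int(src_mac.split(":")[-1], 16)
    dst_last = int(dst_mac.split(":")[-1], 16)
    h = src_last ^ dst_last ^ ethertype
    h ^= int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    # Fold the upper bits down so they influence the low bits used by modulo
    h ^= h >> 16
    h ^= h >> 8
    return h


def pick_link(src_mac: str, dst_mac: str, src_ip: str, dst_ip: str,
              link_count: int = 2) -> int:
    """Return the index of the bond member that would carry this flow."""
    return layer23_hash(src_mac, dst_mac, src_ip, dst_ip) % link_count


# Hypothetical VM talking to two routed destinations via the same gateway MAC
vm_mac, gw_mac = "bc:24:11:00:00:01", "bc:24:11:00:00:02"
for dst in ("10.0.30.5", "10.0.30.6"):
    print(dst, "-> link", pick_link(vm_mac, gw_mac, "10.0.20.11", dst))
```

Two consequences follow from the formula: the same flow always lands on the same link, so frames are not reordered, and distribution is only as even as the mix of addresses, so a handful of flows can still end up sharing one link.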

On the MikroTik side, the corresponding configuration typically uses:

- mode=802.3ad

- transmit-hash-policy=layer-2-and-3

- A bridge or interface configured as an 802.1Q trunk
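Once both ends are configured, the Linux side reports the negotiated state in /proc/net/bonding/bond0. The Python sketch below parses that text and checks that the bond runs in 802.3ad mode with every member up and in the same aggregator. The sample text is abbreviated and illustrative rather than captured from the author's host, and the helper names are hypothetical.

```python
SAMPLE = """\
Ethernet Channel Bonding Driver: v6.8.12

Bonding Mode: IEEE 802.3ad Dynamic link aggregation
Transmit Hash Policy: layer2+3 (2)
MII Status: up
MII Polling Interval (ms): 100

Slave Interface: enp1s0
MII Status: up
Aggregator ID: 1

Slave Interface: enp1s0d1
MII Status: up
Aggregator ID: 1
"""


def summarize_bond(text: str) -> dict:
    """Extract bond mode, hash policy, and per-member status from the text."""
    summary = {"mode": None, "hash_policy": None, "slaves": {}}
    current = None  # name of the member section currently being read
    for raw in text.splitlines():
        key, sep, value = raw.strip().partition(":")
        if not sep:
            continue
        key, value = key.strip(), value.strip()
        if key == "Bonding Mode":
            summary["mode"] = value
        elif key == "Transmit Hash Policy":
            summary["hash_policy"] = value
        elif key == "Slave Interface":
            current = value
            summary["slaves"][current] = {}
        elif current and key in ("MII Status", "Aggregator ID"):
            summary["slaves"][current][key] = value
    return summary


def lacp_healthy(summary: dict) -> bool:
    """True if 802.3ad is active and all members are up in one aggregator."""
    slaves = list(summary["slaves"].values())
    aggregator_ids = {s.get("Aggregator ID") for s in slaves}
    return (
        "802.3ad" in (summary["mode"] or "")
        and bool(slaves)
        and all(s.get("MII Status") == "up" for s in slaves)
        and len(aggregator_ids) == 1
        and None not in aggregator_ids
    )


print(lacp_healthy(summarize_bond(SAMPLE)))
```

On a real host the same functions could be fed the contents of /proc/net/bonding/bond0. Members that report different aggregator IDs usually point to a switch port that is not part of the same LACP bundle.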

Scaling This Design to Multiple Proxmox Hosts

This architecture scales cleanly across multiple Proxmox nodes:

- Identical VLANs on every host: VLAN IDs are consistent cluster-wide, enabling seamless VM migration.

- Uniform bonding and bridge design: Every host uses the same bond and bridge naming (bond0, trunk), simplifying automation and documentation.

- One port-channel per host: Each Proxmox node connects to its own LACP bundle on the MikroTik switch.

- Migration-ready networking: Because all VLANs are available everywhere, live migration works without network reconfiguration.

Adding new VLANs becomes a purely logical operation: allow the VLAN on the switch trunk, allow it on the trunk bridge (only needed if the bridge is VLAN-aware and restricts VLAN IDs), and tag workloads accordingly.
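Because migration depends on every VLAN being present on every node, a simple consistency check is worth automating. The Python sketch below compares a required VLAN set against what each host's trunk allows; the host names and VLAN IDs are hypothetical, and how the per-host sets are collected (switch export, config management, and so on) is left open.

```python
def missing_vlans(required: set[int], hosts: dict[str, set[int]]) -> dict[str, set[int]]:
    """Return, per host, the required VLAN IDs that its trunk does not carry."""
    gaps = {}
    for host, allowed in hosts.items():
        missing = required - allowed
        if missing:
            gaps[host] = missing
    return gaps


# Hypothetical cluster: pve2 has not yet been given the new VLAN 40
required = {10, 20, 30, 40}
hosts = {
    "pve1": {10, 20, 30, 40},
    "pve2": {10, 20, 30},
}
print(missing_vlans(required, hosts))
```

An empty result means any workload can be migrated to any node without touching the network configuration.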

Conclusion

Using a bonded 802.1Q trunk with LACP in Proxmox VE allows a homelab to operate like a small enterprise data center. By combining VLAN-aware bridges with standards-based link aggregation, especially LACP with layer 2+3 hashing on MikroTik switches, the environment gains resilience, scalability, and realism. This approach avoids lab-specific shortcuts and provides hands-on experience that directly translates to production networks.